

In 2017, Sony’s Alpha 9 camera changed the game by shooting 20 frames per second with no viewfinder blackout. This was a huge leap forward, made possible by a new sensor design, and it is now changing how we take pictures.

This new tech builds on the backside-illuminated (BSI) idea with a smart layered design. The sensor now sits in one package with its processing circuitry and fast memory. This makes data flow smoothly and cuts down processing time.

This means your camera can read sensor data much more quickly. No more waiting for your shot to appear in the viewfinder. It’s a big win for sports and wildlife photographers, who deal with fast-moving subjects.

In this guide, you’ll learn how these systems work. You’ll see why they’re important for all kinds of photographers.

Stacked sensor cameras are a big step up in camera tech. They have a layered design that boosts performance. Unlike older sensors, they stack their parts in 3D for better efficiency.

At their heart, these cameras use a special CMOS sensor. This sensor has layers of parts stacked on each other. This design makes the camera more compact but powerful.

The first stacked CMOS sensor came out in 2012. It was a big change in how sensors are made, and it’s still getting better.

Old cameras have sensors where photodiodes and circuitry are side by side. This limits how much light they can capture because the circuitry takes up space.

Stacked sensors put photodiodes on top and circuitry below. This lets them capture more light, making them more efficient.
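To put a rough number on that idea, here is a minimal sketch. The pixel size and the two fill factors (the share of each pixel's surface that actually collects light) are hypothetical values picked for illustration, not measurements from any real sensor.

```python
def light_area_um2(fill_factor: float, pixel_area_um2: float) -> float:
    """Light-sensitive area of one pixel, in square micrometres."""
    if not 0.0 < fill_factor <= 1.0:
        raise ValueError("fill_factor must be in (0, 1]")
    return fill_factor * pixel_area_um2


# Hypothetical numbers, chosen only to illustrate the principle.
PIXEL_AREA = 6.0 * 6.0     # a 6 µm x 6 µm pixel
SIDE_BY_SIDE_FILL = 0.5    # circuitry shares the surface with the photodiode
STACKED_FILL = 0.9         # circuitry moved to a layer underneath

side_by_side = light_area_um2(SIDE_BY_SIDE_FILL, PIXEL_AREA)
stacked = light_area_um2(STACKED_FILL, PIXEL_AREA)
gain = stacked / side_by_side

print(f"Side-by-side pixel: {side_by_side:.1f} µm² collecting light")
print(f"Stacked pixel:      {stacked:.1f} µm² collecting light")
print(f"Light-gathering gain: {gain:.2f}x")
```

With these assumed values the stacked pixel collects a little under twice the light. Real sensors also use microlenses and other tricks, so treat this as a way to picture the trade-off rather than a prediction.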

Traditional sensors move data horizontally, which slows things down. Stacked sensors move data vertically, making it faster.


A stacked sensor camera has different layers for different jobs. The top layer has photodiodes that turn light into signals. These photodiodes can be bigger, catching more light.

The layer below has transistors for reading and boosting signals. This design is different from old cameras, where the circuitry wraps around the photodiodes.

The bottom layers have memory and processing parts. These help the camera work faster, supporting quick image capture and 3D mapping.

Thousands of tiny connections called through-silicon vias (TSVs) link the layers. These connections are key to the camera’s speed and efficiency.

The stacked design makes devices thinner without losing performance. Since the start, Sony and others have made these sensors thinner, helping smartphones get slimmer.

Speed is another big plus. Stacked sensors can read data faster, allowing for faster electronic shutter speeds and better video.

They also do better in low light. With more space for light capture and less noise, they take cleaner photos in tough lighting.

The design also lets each layer be optimized separately. This means better performance without making the camera bigger. This is super useful in small devices like phones and action cameras.


Every stunning image from a stacked sensor camera uses a complex technology. It combines optical science with advanced computing. This new camera design changes how light is captured and processed.

Understanding stacked sensor technology shows why these cameras perform well in tough shooting conditions. They deliver impressive results.

In a stacked sensor camera, the sensor captures light and processes it. The photodiodes are at the top, capturing light with little blockage. This setup lets each pixel gather more light. More light means better images with finer details and less noise.

In interchangeable-lens cameras, this design took off with Sony’s 2017 full-frame stacked sensor, paving the way for the latest full-frame and APS-C cameras.

When light hits the photodiodes, it turns into electrical signals. These signals are then passed to the processing layers.

A key part of this system is DRAM memory built right into the sensor stack. This memory acts as a fast buffer, holding a large amount of image data before it is processed.
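Here is a simplified sketch of why a bigger, faster buffer matters. It treats the memory as a bucket that the sensor fills and the processor drains. The function name and all the figures are hypothetical, and real cameras are more complicated than this.

```python
def burst_seconds(buffer_frames: int, capture_fps: float, drain_fps: float) -> float:
    """Seconds of continuous shooting before the buffer is full.

    capture_fps: frames per second the sensor writes into the buffer.
    drain_fps:   frames per second the processor removes from the buffer.
    """
    if buffer_frames <= 0 or capture_fps <= 0 or drain_fps < 0:
        raise ValueError("buffer size and capture rate must be positive")
    if drain_fps >= capture_fps:
        return float("inf")  # the processor keeps up, so the buffer never fills
    return buffer_frames / (capture_fps - drain_fps)


# Hypothetical figures for illustration only.
small_buffer = burst_seconds(buffer_frames=20, capture_fps=20, drain_fps=10)
large_buffer = burst_seconds(buffer_frames=200, capture_fps=20, drain_fps=10)

print(f"Small buffer: {small_buffer:.0f} s of continuous shooting")
print(f"Large buffer: {large_buffer:.0f} s of continuous shooting")
```

In this toy model, ten times the buffer gives ten times the burst length at the same shooting speed. That is the basic reason memory in the stack helps with long high-speed bursts.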

This setup lets cameras do complex tasks like noise reduction and HDR compositing, using machine learning algorithms for these tasks. This makes the camera work faster and more efficiently.

| Processing | Traditional Sensor | Stacked Sensor | Advantage |
|---|---|---|---|
| Light Capture | Photodiodes share space with circuitry | Dedicated photodiode layer | Up to 2x more light information |
| Data Transfer | Sequential readout to external processor | Parallel transfer to integrated memory | 10-20x faster data throughput |
| Image Processing | Primarily handled by a separate processor | Initial processing on-chip with ML support | Reduced latency and real-time analysis, essential for capturing 6K video |
| Computational | Limited by data transfer bottlenecks | Enhanced by high-speed buffer memory | Advanced algorithms in real time |

Stacked sensors offer many benefits. They have a much faster readout speed. This means you can shoot continuously without the viewfinder blackout.

They also have less rolling shutter effect. This is ideal for sports, wildlife, and action photography.
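If you want to see why readout speed and rolling shutter go hand in hand, here is a small sketch. The subject speed and both readout times are hypothetical values for illustration, not specs from any particular camera.

```python
def skew_pixels(subject_speed_px_per_s: float, readout_time_s: float) -> float:
    """Sideways lean, in pixels, of a frame-tall subject moving horizontally.

    With a rolling shutter the top row is read first and the bottom row last,
    so the subject has moved by (speed x readout time) between the two.
    """
    if subject_speed_px_per_s < 0 or readout_time_s <= 0:
        raise ValueError("speed must be >= 0 and readout time must be > 0")
    return subject_speed_px_per_s * readout_time_s


# Hypothetical values for illustration only.
SUBJECT_SPEED = 3000.0    # pixels per second across the frame
SLOW_READOUT = 1 / 30     # seconds to read the whole sensor
FAST_READOUT = 1 / 240    # seconds to read the whole sensor

slow_skew = skew_pixels(SUBJECT_SPEED, SLOW_READOUT)
fast_skew = skew_pixels(SUBJECT_SPEED, FAST_READOUT)

print(f"Slow readout: {slow_skew:.1f} px of lean")
print(f"Fast readout: {fast_skew:.1f} px of lean")
```

The lean shrinks in direct proportion to the readout time, which is why a faster sensor makes a swinging bat or a panning shot look straighter.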

Low-light performance is better as well. The sensors gather more light, making images cleaner in dark conditions.

On-chip processing lets cameras do advanced tasks. This includes autofocus tracking and subject recognition.

This design also uses less power. Cameras with stacked sensors can work efficiently, produce outstanding photos, and run longer on a single battery charge.

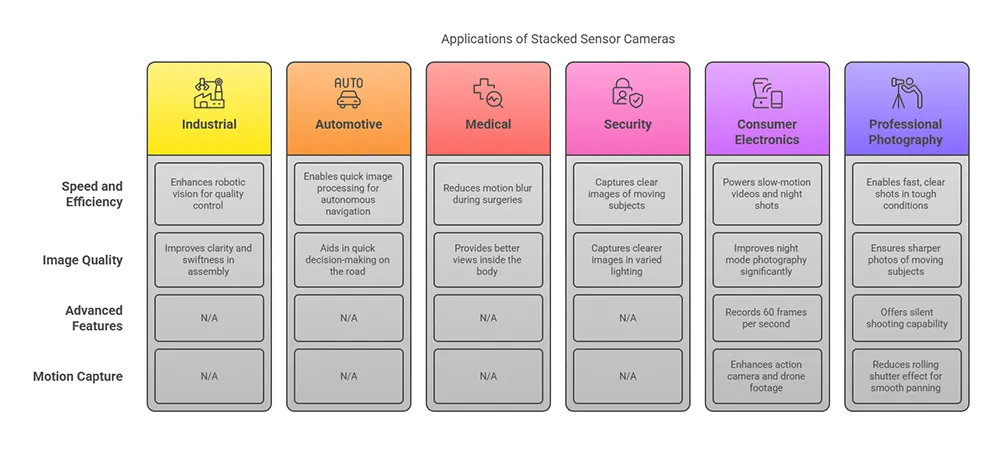

Stacked sensors are changing how we see and process images. They are key in many fields because of their speed and ability to handle complex scenes, which makes them perfect for places where older cameras can’t keep up.

Stacked sensors hold significant importance in the industrial world. They help robots see clearly and swiftly, making quality checks and assembly work better.

The car industry also loves stacked sensor cameras. They help self-driving cars see and move around by processing images quickly. This is crucial for making fast decisions on the road.

In medicine, these sensors help doctors see more clearly during surgeries. They reduce motion blur, giving surgeons a better view inside the body.

Security systems also use these sensors. They capture clearer images of moving people in different light conditions.

Nowadays, your smartphone might have a stacked sensor, giving you access to cool features like slow-motion video and better night shots.

Expect to see smartphones with the ability to record 60 frames per second. This lets you see details in motion that were difficult to catch before.

Night mode photography has also improved thanks to stacked sensors. They can now combine multiple exposures quickly, making dark photos bright and clear.
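The idea behind combining exposures can be sketched in a few lines. This is a toy simulation, not how any phone actually does it: it assumes the frames are already perfectly aligned, uses made-up brightness and noise values, and simply averages them.

```python
import random
import statistics


def noisy_frame(true_value: float, noise_sigma: float, n_pixels: int,
                rng: random.Random) -> list[float]:
    """One simulated exposure of a flat grey scene with random sensor noise."""
    return [rng.gauss(true_value, noise_sigma) for _ in range(n_pixels)]


def stack_frames(frames: list[list[float]]) -> list[float]:
    """Average already-aligned frames pixel by pixel."""
    return [sum(pixels) / len(frames) for pixels in zip(*frames)]


# Hypothetical scene: brightness 50, noise sigma 10, 16 quick exposures.
rng = random.Random(42)
frames = [noisy_frame(50.0, 10.0, 5000, rng) for _ in range(16)]

single_noise = statistics.pstdev(frames[0])
stacked_noise = statistics.pstdev(stack_frames(frames))

print(f"Noise in one frame:       {single_noise:.2f}")
print(f"Noise after stacking 16:  {stacked_noise:.2f}")
```

Averaging N frames cuts random noise by roughly the square root of N, so 16 frames should land near a quarter of the single-frame noise. The faster the sensor can grab those frames, the less the scene moves between them.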

Action cameras and drones also use stacked sensors, which enhance their digital camera capabilities. They help capture smooth footage during fast activities. This technology is excellent for filming moving subjects or capturing those fleeting moments.

Professional photographers who shoot sports and wildlife will prefer stacked camera sensors. They make fast and clear shots possible in tough conditions.

High-end cameras can now continuously shoot 30 frames per second. This is a big deal for capturing the right moment in sports and wildlife photos.

These cameras also focus faster and more accurately. This means sharper photos of moving subjects.

Wedding and event photographers benefit from the cameras’ silent shooting, which lets them take photos without disturbing the scene. It’s perfect for quiet moments during ceremonies or shows.

Videographers also prefer these cameras for their smooth panning shots. The reduced rolling shutter effect makes fast-moving scenes look natural. This is important for high-end video work.

Before you commit to a stacked sensor camera, let’s look at its strengths and downsides. Knowing these will help you decide if it fits your needs or vision.

Their main plus is how fast they read data. Unlike old sensors, they can process the whole frame quickly.

This rapid readout cuts down on the rolling shutter effect. They also grab more light, making photos clearer in the dark. You’ll see less noise in low-light shots, which means more detail in the shadows.

Despite their cool features, stacked sensor cameras have some downsides. One big issue is the bigger files they make. These high-speed sensors need more storage space.

Handling these big files takes more power. You might need a faster computer or wait longer to edit footage.
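To get a feel for how quickly fast bursts add up, here is a back-of-the-envelope sketch. The 24-megapixel resolution, 14-bit depth, and 5-second burst are assumptions for illustration, and the numbers are for uncompressed data, so real files will usually be smaller.

```python
def frame_megabytes(megapixels: float, bits_per_pixel: int) -> float:
    """Uncompressed size of one frame in megabytes (1 MB = 1,000,000 bytes)."""
    return megapixels * bits_per_pixel / 8


def burst_megabytes(megapixels: float, bits_per_pixel: int,
                    fps: float, seconds: float) -> float:
    """Uncompressed data produced by a continuous burst."""
    return frame_megabytes(megapixels, bits_per_pixel) * fps * seconds


# Assumed sensor: 24 megapixels at 14 bits per pixel, shooting for 5 seconds.
one_frame = frame_megabytes(24, 14)
burst_20fps = burst_megabytes(24, 14, fps=20, seconds=5)
burst_30fps = burst_megabytes(24, 14, fps=30, seconds=5)

print(f"One frame:          {one_frame:.0f} MB")
print(f"5 s burst at 20 fps: {burst_20fps:.0f} MB")
print(f"5 s burst at 30 fps: {burst_30fps:.0f} MB")
```

Under these assumptions, a few seconds of shooting produces gigabytes of data, which is why fast cards and a capable editing machine matter.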

They can also get hot, which is a problem when recording lots of video. This heat might cause the camera to shut down to prevent damage.

Their complex design can lead to more failures, making them less reliable and pricier to fix over time.

The advanced design makes them pricier. Cameras with stacked sensors can cost roughly 30–50% more than those without. This can be a big difference, mainly in the pro and high-end mirrorless markets.

Whether the extra cost is worth it depends on your needs. If you are interested in fast action photography, low-light shots, or computer vision applications, the extra cost might be justified. But for casual photography, a traditional sensor might be better.

| Feature | Stacked Sensor | Traditional Sensor | Impact on Usage |
|---|---|---|---|
| Readout Speed | Extremely fast (1/1000s or better) | Moderate to slow (1/30s to 1/200s) | Critical for action photography and object detection |
| Low Light | Superior with less noise | Variable, often with more visible noise | Important for evening/indoor shooting |
| Heat Generation | Higher (potential for overheating) | Lower (better for extended use) | Affects continuous shooting and video recording |
| Cost | Premium (30-50% higher) | More affordable | Determines accessibility for different user groups |
| File Size | Larger files requiring more storage | More manageable file sizes | Impacts storage requirements and workflow speed |

When choosing between stacked and traditional sensors, think about your needs and budget. For a pro, the benefits might be worth it. But for a casual photographer, traditional sensors could be a better deal.

...Bob

Single-stacked sensors have been around for a while and now appear in a growing number of mirrorless cameras. Double-stacked designs are newer, as in the Sony Xperia 1 V, released in May 2023. This shows we’re just starting to see what stacked sensors can do.

This technology is still in its early days, with lots of room to grow. We see it mainly in professional cameras and high-end phones. But as making these sensors gets better and cheaper, we’ll see them everywhere.

The next step for stacked sensors is more layers. We’ll see sensors with more than two or three layers. These will do more and work better, thanks to testing and advances in design.

These sensors will also have special units for machine learning. This means your camera can do more without needing to send data elsewhere. It will work faster and use less power.

Another big change is combining stacked sensors with lidar technology. This will make cameras see depth better. Your phone’s portrait mode will get better, and robots will understand their space better.

As technology gets smaller, cameras, including APS-C cameras, will get more powerful. Who knows, soon you may have a professional camera in your pocket. It won’t be big or heavy, but it will still take amazing photos.

| Feature | Stacked Sensors Today | In 3 Years | In 5+ Years |
|---|---|---|---|
| Layer Count | 2-3 layers | 4-5 layers | 6+ specialized layers |
| Processing Capabilities | Basic on-sensor processing | Standard in high-end digital cameras | Full AI computing units |
| Depth Sensing | Separate lidar sensors | Integrated depth mapping | Advanced 3D scene understanding |
| Power Efficiency | Moderate improvements | 50% reduction in power needs | Ultra-low power with selective activation |
| Market Adoption | High-end devices only | Mid-range consumer devices | Standard in most imaging devices |

I can almost guarantee that stacked sensors will soon work with AI, revolutionizing how we use digital cameras. Your camera will understand what it sees. It will recognize scenes, identify objects, and adjust settings automatically.

In professional use, these changes will make work easier. Cameras will tag subjects, adjust settings, and even suggest better shots. All this will be thanks to the built-in neural processing units.

Adding lidar technology will change augmented reality. Your device will map spaces accurately. This will make virtual objects seem more real in games and design.

As the production costs of these sensors decrease, more phones will include them, meaning better cameras for everyone. We’ll expect more from our smartphones.

These changes will open up new uses for cameras. Cars will see better, medical imaging will improve, and farming will get more precise.

Cameras will soon do more than just take pictures. They’ll understand what they see. This is a big change in what we think cameras, particularly DSLRs and mirrorless models, can do.

Stacked sensor cameras are a big step forward in digital imaging. They combine many parts into a small space, letting them do things that older digital cameras can’t.

Soon cameras will do more than just take pictures. They’ll help self-driving cars see the road better. They can spot people, cars, and dangers fast and accurately.

You might already own a device with these sensors without realizing it. Many top smartphones use these sensors. They help with rapid focusing and quick photo taking. As technology gets better and cheaper, you’ll see them in more devices.

Even as today’s designs approach their limits, new techniques keep pushing them further. Adding artificial intelligence makes them even smarter.

Whether you love taking photos or just want to know how your camera works, learning about stacked sensor cameras is fascinating. It shows how far we’ve come in capturing and handling digital images.

Stacked sensors have a unique design. They separate light-capturing elements from processing circuitry. This stacked setup allows for faster data handling and better low-light performance.

A stacked sensor camera has several layers. The top layer captures light and turns it into electrical signals. The middle layer reads out and amplifies those signals. The bottom layers store data and process it. This setup enables fast data handling.

Stacked sensor cameras are used in many fields. They’re in robotics for quality control and in the automotive industry for autonomous vehicles. They’re also in high-end smartphones and professional cameras. They’re used in security systems, medical imaging, and more.
